I designed a new, intuitive information architecture for the online platform of the third-largest US brokerage firm.

Role: Lead UX Researcher

Process: Design Study | Launch Study | Analyze Results

Method: Card Sort

Solution: Using Optimal Workshop to run an unmoderated card sort study, I evaluated the mental models of brokerage customers to establish an information architecture that served as the basis for a new navigational structure on Wells Fargo Advisors’ online platform.

Project Background

Wells Fargo Advisors (WFA) released its online brokerage platform (the legacy platform) in 1999. On this platform, WFA customers manage their investment portfolios and interact with financial markets online. In 2015, my design team and I released the platform’s first-ever redesign (the new platform).

After 15 years of functional additions with no architectural updates, the legacy platform had become bloated and unintuitive. As one user put it during usability testing, the legacy platform’s navigation was a “confusing cacophony of tabbage”. To solve this problem and determine an information architecture (IA) for the new platform, I conducted many studies, including a card sort.

Determining Cards

My first step in designing the card sort study was to reconcile the legacy platform’s IA, which helped determine which cards to include. The legacy platform had approximately 80 webpages, far too many to use as cards: more than about 30 cards would cause participant fatigue and reduce the validity of the results.

To reconcile the legacy platform’s IA, I documented each webpage in a spreadsheet, grouped functionally related webpages together, and added web traffic data. Using this data, I isolated 30 cards that represented the breadth of online brokerage features and the platform’s most important content. I also verified that these cards would help answer key IA questions that came from prior usability testing I had conducted on the legacy platform’s navigation. For example, I wanted to know how users think about a financial plan: do they associate a plan with their investment portfolio, or with the planning tools they used to create it?

With the cards determined, I wrote a short description for each one. These descriptions ensured that participants sorted cards based on their meaning rather than on potentially inaccurate interpretations of terminology.

Study Protocol

I wrote an introductory survey for the card sort so I could segment the results by factors I hypothesized might affect our users’ mental models. These factors included each user’s frequency of online brokerage activity, the online brokerage features they use, and their client preference model (CPM). A CPM is a persona describing how a user manages their finances. The CPMs are:

• Delegators who trust financial professionals to manage their finances.

• Collaborators who manage their finances in collaboration with professionals.

• Self-directed investors who independently manage their finances.

I conducted the card sort with two cohorts of 100 participants each: one cohort completed a closed sort and the other an open sort. I based the closed sort categories on the legacy platform’s top-level IA and adjusted them based on preliminary user testing I had conducted. We ran the closed sort to test our hypothesis for the best IA, and we ran the open sort so we would still have valuable insight if that hypothesis turned out to be wrong.

I needed 200 participants (100 in the closed sort and 100 in the open sort) so I could nearly guarantee at least 15 (ideally 20) participants matching each value of the factors in our analysis:

• Frequency of Online Brokerage Activity: quarterly | monthly | weekly

• Typically Used Features: trading | market research | portfolio monitoring | financial planning | financial education | investment research

• Client Preference Model: delegator | collaborator | self-directed
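The recruitment reasoning above can be checked with a simple binomial model. This sketch is illustrative only: it assumes each recruited participant independently falls into a given segment with some probability `p`, and the segment shares used below (25% and 10%) are hypothetical, not figures from the study. The helper names (`prob_at_least`, `min_cohort_size`) are likewise invented for this example.

```python
from math import exp, lgamma, log


def binomial_pmf(i: int, n: int, p: float) -> float:
    """P(X = i) for X ~ Binomial(n, p), computed in log space to avoid overflow."""
    log_pmf = (
        lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1)
        + i * log(p) + (n - i) * log(1 - p)
    )
    return exp(log_pmf)


def prob_at_least(k: int, n: int, p: float) -> float:
    """Chance that a cohort of n participants contains at least k members of a
    segment making up a (hypothetical) fraction p of recruits."""
    if not 0 < p < 1:
        raise ValueError("p must be strictly between 0 and 1")
    return sum(binomial_pmf(i, n, p) for i in range(k, n + 1))


def min_cohort_size(k: int, p: float, target: float = 0.95, n_max: int = 5000) -> int:
    """Smallest cohort size n with P(at least k segment members) >= target."""
    for n in range(k, n_max + 1):
        if prob_at_least(k, n, p) >= target:
            return n
    raise ValueError("target not reachable within n_max participants")
```

Under these assumptions, a segment making up a quarter of respondents yields at least 15 members in a 100-person cohort with probability above 98%, while a 10% segment usually falls short. Rarer segments therefore need either larger cohorts or targeted recruiting.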

The card sort ended with an open-ended question that prompted participants to describe any confusion, list the cards they found challenging to sort, and explain why. These responses added qualitative insight for cards without clear results in the study. Often, they clarified issues with terminology and allowed me to separate noise from the study results.

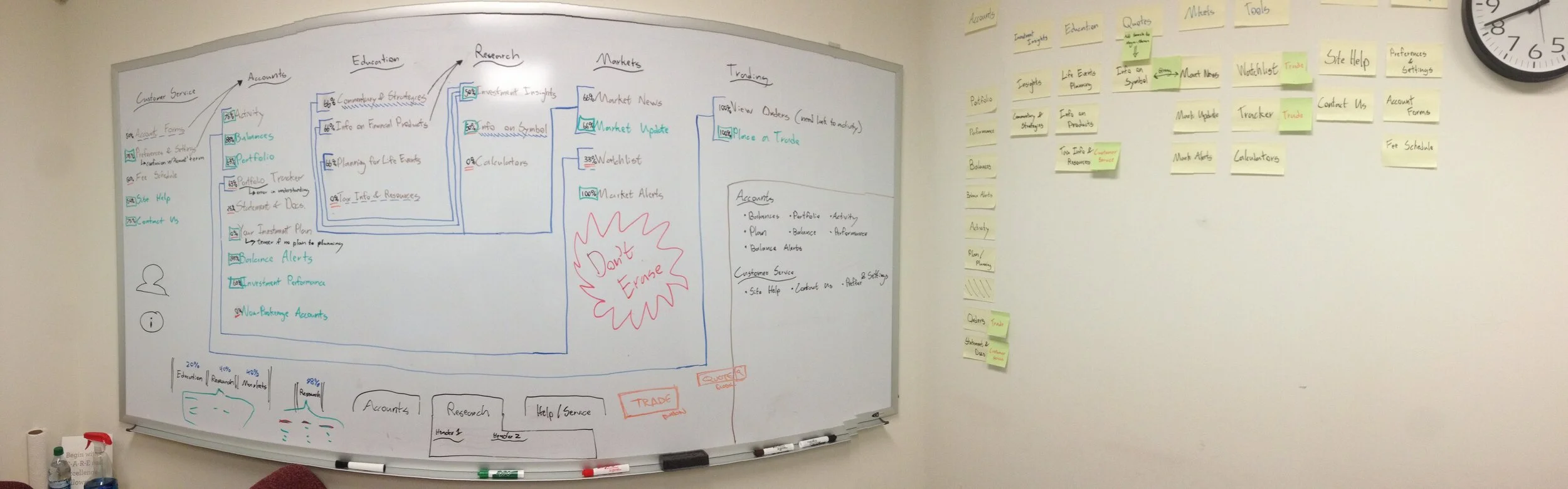

My first task in analyzing the card sort results was to identify the differences between the open and closed sort data. A significant difference would indicate that the closed sort categories did not reflect users’ mental model of an online brokerage platform. This evaluation also helped me and the project team recognize features that, regardless of their formal placement in the IA, should be linked by contextual navigation pathways. The image above shows my analysis comparing the closed and open sort results.

For my analysis, I first organized cards into the groups they were most frequently placed in during the closed sort. I then identified cards that at least 50% of open sort participants grouped with other cards from their closed sort category; this indicated a strong closed sort categorization preference. I also identified cards that fewer than 30% of open sort participants grouped with other cards from their closed sort category. This indicated a weak closed sort categorization preference and suggested that a category not present in the closed sort might better match users’ mental models. Finally, I mapped connections between cards that more than 50% of open sort participants grouped together but that belonged to different closed sort categories. These connections indicated a potential need for broader top-level IA categories and/or contextual navigation pathways between elements.
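The threshold rules above can be expressed as a short script. This is a minimal sketch under stated assumptions: the card names and open sort groupings are hypothetical toy data, and "grouped with other cards from its closed sort category" is read here as "placed in the same group as at least one other card from that category," which may differ from how the original analysis counted it.

```python
from itertools import combinations

# Hypothetical toy data: each card's closed-sort category, and each open-sort
# participant's groups (a list of sets of card names).
closed_category = {
    "Balances": "Portfolio",
    "Positions": "Portfolio",
    "Market News": "Markets",
    "Quotes": "Markets",
    "Analyst Reports": "Research",
    "Fund Screener": "Research",
}
open_sorts = [
    [{"Balances", "Positions"}, {"Market News", "Quotes", "Analyst Reports", "Fund Screener"}],
    [{"Balances", "Positions"}, {"Market News", "Analyst Reports", "Fund Screener"}, {"Quotes"}],
    [{"Balances", "Positions", "Quotes"}, {"Market News", "Analyst Reports"}, {"Fund Screener"}],
    [{"Balances"}, {"Positions", "Quotes"}, {"Market News", "Analyst Reports", "Fund Screener"}],
]


def agreement(card, closed_category, open_sorts):
    """Share of open-sort participants who grouped `card` with at least one
    other card from its closed-sort category (an assumed reading of the rule)."""
    peers = {c for c, cat in closed_category.items()
             if cat == closed_category[card] and c != card}
    hits = 0
    for groups in open_sorts:
        group = next((g for g in groups if card in g), set())
        if peers & group:
            hits += 1
    return hits / len(open_sorts)


def classify(closed_category, open_sorts, strong=0.5, weak=0.3):
    """Label each card's closed-sort placement as strong, weak, or moderate."""
    labels = {}
    for card in closed_category:
        share = agreement(card, closed_category, open_sorts)
        if share >= strong:
            labels[card] = "strong"
        elif share < weak:
            labels[card] = "weak"
        else:
            labels[card] = "moderate"
    return labels


def cross_category_links(closed_category, open_sorts, threshold=0.5):
    """Card pairs from different closed-sort categories that more than
    `threshold` of open-sort participants placed in the same group."""
    links = []
    for a, b in combinations(sorted(closed_category), 2):
        if closed_category[a] == closed_category[b]:
            continue
        together = sum(any(a in g and b in g for g in groups) for groups in open_sorts)
        if together / len(open_sorts) > threshold:
            links.append((a, b))
    return links
```

On this toy data, `classify` marks the Portfolio and Research cards as strong and the Markets cards as weak, and `cross_category_links` pairs ‘Market News’ with both Research cards: the kind of cross-category connection that points toward a broader top-level category.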

Beyond comparing the closed and open sort results, I analyzed both cohorts against the factors outlined earlier (frequency, typical use, and CPM). Optimal Workshop’s statistical and filtering tools were crucial to this process. In particular, similarity matrices and dendrograms let me translate the data into design solutions and considerations for the new platform’s IA.
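A similarity matrix records, for every pair of cards, the share of open sort participants who placed them in the same group, and a dendrogram is drawn from a hierarchical clustering of that matrix. The sketch below builds both from hypothetical toy data using average-linkage agglomerative clustering. Average linkage is a common choice for card sort dendrograms, but it is an assumption here, not necessarily the method Optimal Workshop uses.

```python
from itertools import combinations

# Hypothetical open-sort results: one list of groups (sets of card names) per participant.
cards = ["Quotes", "Market News", "Analyst Reports", "Balances"]
open_sorts = [
    [{"Quotes", "Market News", "Analyst Reports"}, {"Balances"}],
    [{"Quotes", "Market News", "Analyst Reports"}, {"Balances"}],
    [{"Quotes", "Market News"}, {"Analyst Reports", "Balances"}],
]


def similarity_matrix(cards, open_sorts):
    """Share of participants who placed each pair of cards in the same group."""
    sim = {}
    for a, b in combinations(cards, 2):
        together = sum(any(a in g and b in g for g in groups) for groups in open_sorts)
        sim[frozenset((a, b))] = together / len(open_sorts)
    return sim


def average_linkage(cards, sim):
    """Agglomerative clustering with average linkage (an assumed method).
    Returns merge steps (cluster_a, cluster_b, similarity): the joins a dendrogram draws."""
    clusters = [frozenset([c]) for c in cards]
    merges = []
    while len(clusters) > 1:
        best = None
        for x, y in combinations(clusters, 2):
            # Average similarity over every cross-cluster pair of cards.
            score = sum(sim[frozenset((a, b))] for a in x for b in y) / (len(x) * len(y))
            if best is None or score > best[2]:
                best = (x, y, score)
        x, y, score = best
        clusters = [c for c in clusters if c not in (x, y)] + [x | y]
        merges.append((x, y, score))
    return merges
```

Reading the merge steps from first to last reproduces the dendrogram from the bottom up: ‘Quotes’ and ‘Market News’ join first (similarity 1.0), ‘Analyst Reports’ joins them next (about 0.67), and ‘Balances’ attaches last (about 0.11).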

Ultimately, the findings from this card sort study were foundational in establishing the new platform’s IA. The primary findings that shaped my IA recommendations included:

• Contextualized Alerts: Our users want alerts to be connected to the content they relate to, not placed in a separate alerts/notifications section of the platform.

• Investment Plan as Portfolio Content: Instead of grouping an investment plan with the features that create it, our users see the plan as an output of those tools and as part of their investment portfolio.

• Frequency of Use Unimportant: Frequency of online brokerage use did not impact the results of card sorting.

• Discrepancies Removed with Typical-Use Data: By and large, when we filtered results by typically used features, the variance in our results collapsed and a clear categorization emerged.

• CPM Impacted Commentary & Strategies: The only card with a CPM dependency was ‘Commentary & Strategies’, which collaborators categorized with education, while delegators and self-directed participants categorized it with research.

• Account Terminology Confusing: Users’ interpretation of an ‘account’ in online experiences conflicts with what the brokerage industry regards as an ‘account’, which causes confusion.
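Several of the findings above, such as the CPM dependency of ‘Commentary & Strategies’ and the collapse of variance when filtering by typically used features, come from segmenting closed sort placements. The sketch below shows that filtering step for a single card. The placement counts and segment tags are hypothetical, chosen only to illustrate the pattern, and the function names are invented for this example.

```python
from collections import Counter

# Hypothetical closed-sort placements for one card: (participant segment tags, category).
placements = [
    ({"collaborator"}, "Education"),
    ({"collaborator"}, "Education"),
    ({"collaborator"}, "Education"),
    ({"delegator"}, "Research"),
    ({"delegator"}, "Research"),
    ({"self-directed"}, "Research"),
    ({"self-directed"}, "Research"),
]


def top_category(placements):
    """Most common category for the card and the share of participants who chose it."""
    counts = Counter(category for _, category in placements)
    category, n = counts.most_common(1)[0]
    return category, n / len(placements)


def filter_by_segment(placements, segment):
    """Keep only placements from participants tagged with `segment`."""
    return [(tags, cat) for tags, cat in placements if segment in tags]
```

Unfiltered, the leading category wins only about 57% of placements, which looks like a weak preference; filtered by segment, each group agrees completely. The same filter would apply to tags for typically used features or frequency of use.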

Education, Research, and Markets

The most significant finding from this study was how frequently the education, research, and markets closed sort categories were connected. I found strong open sort connections between nearly every card in these three categories, which suggested that the ideal IA might merge them at the top level of the navigation. The results also suggested that merging these categories at the top level would substantially improve user confidence in related navigational tasks.

Ideally, I would have conducted a tree testing study to evaluate the two options (merged versus separate top-level categories); however, project timelines and limited resources made this impossible. Whereas a card sort reveals users’ mental models to inform IA design, a tree test evaluates the efficacy of a specific IA design. In lieu of tree testing, the project team proceeded with navigation designs based on a combined top-level IA for education, research, and markets.